To understand the problem with public examinations we need to understand the different objectives they serve and how they interact and conflict with each other, says TCA Anant.

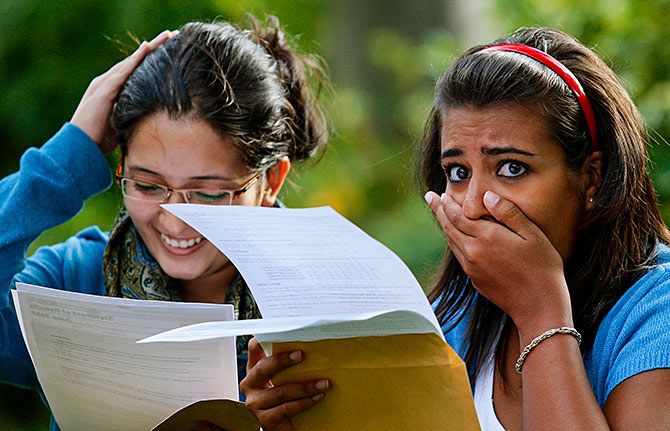

Photograph*: Phil Noble/Reuters

Summer brings with it mangoes, the summer loo, iced banta and, of course, examinations.

The Central Board of Secondary Education (CBSE) has released its results; different state boards have been announcing results every other day.

Delhi University is gearing up for its annual admission examinations, and then there are NEET, CLAT, IIT-JEE and so on.

Newspaper columns focus on various issues such as the problem of grade inflation, the impossibly high cutoffs for admission to the good colleges, the conflicting and impossible schedules of entrance examinations and so on.

What is lost in the noise, however, is an understanding of what purpose examinations should serve, what their inherent limits are, and what those limits mean for education policy.

To understand the problem with public examinations we need to understand the different objectives they serve and how they interact and conflict with each other.

Broadly speaking, examinations serve three different objectives.

The first is a threshold objective: describing each student's level of attainment and, most importantly, identifying those who meet the desired minimum level of attainment at that stage of education.

The second objective belongs to higher-level institutions (i.e. colleges and universities) that wish to select from among the students who have met the minimum level of attainment. This selection is constrained by the intake capacity of the institution.

Thus, Delhi University or the IITs will seek to admit the best from amongst those eligible to seek admission. They are therefore interested in a ranking of students.

Finally, there are the makers and researchers of education policy, who use performance in these exams to evaluate how well the overall education system has delivered.

Grade inflation occurs in large part because lower level institutions (i.e. schools) seek to privilege their own students in the entry competition and simultaneously present an attractive performance picture for themselves.

Grade inflation presents a peculiar challenge for higher-level institutions, because their selection is squeezed into an ever-narrower band of marks.

The media has routinely carried stories of elite Delhi colleges closing their first cut-off lists at 100 per cent.

This dilemma of selection, in turn, forces many higher-level institutions to devise their own entrance tests.

The growth in specialised entrance tests then fuels the growth of a service industry that coaches students solely for these tests. This sets off another perverse cycle of incentives, in which students focus on the coaching institution, comforted in large part by the knowledge that grade inflation will carry them past the minimum threshold.

As more institutions adopt entrance tests, the resulting multiplicity of tests and conflicting schedules leads to the creation of centralised, encompassing tests such as NEET, which further privilege the coaching industry.

Since an entrance examination focuses on a limited part of the preparatory curriculum, the higher-level institution faces a peculiar paradox: candidates who succeed in the entrance test often lack the requisite preparatory training.

The response to this problem has been a variety of ad hoc solutions. In the case of IIT admissions, for example, it was decided to effectively raise the threshold a candidate must meet in the qualifying examination to be eligible for consideration.

This is done by splitting the exam into two parts: only the top 2,00,000-odd students on the Joint Entrance Examination (JEE) Main merit list who are also in the top 20 percentile of their respective qualifying examinations are allowed to take JEE Advanced.

A similar problem, in a different form, has recently been highlighted by the Union Public Service Commission (UPSC) results.

Here the problem appears to be a poor correlation between exam results and subsequent performance in the foundation course.

This has led to a proposal to rank successful candidates on a composite of their exam scores and their post-exam performance in the foundation course. The huge debate that followed has essentially been about the lack of transparency in such a process!

What gets lost in the entire discussion, however, is that exams are, in general, very limited in their ability to assess capabilities.

Composite assessment procedures, which combine information gathered from a variety of sources over a period of time, have a much better track record.

The problem is that these are cumbersome to implement and do not lend themselves to simple standardisation. They implicitly require trusting the selection or admission agency and extending it wide latitude in the admission process.

It is worth noting that such centralised exams have fallen into disrepute globally, and most top universities, and for that matter job selection agencies, are turning to composite selection procedures.

It is ironic that while we wish to create institutes of excellence that will rank amongst the best in the world, we do not wish to extend to our own institutions the features that would allow them to replicate the practices followed in those excellent institutions.

In order to truly create centres of excellence in education, we need a paradigm shift in our approach to, and expectations of, examinations and, more importantly, a move to build institutions based on trust.

The writer is former chief statistician of India.

*Lead image used for representational purposes only.